In his DSI Insights article on Inside IT, Kilian Sprenkamp sheds light on the potential of large language models (LLMs) such as GPT-4 for the public sector, particularly in the areas of refugee aid and propaganda detection. Using concrete applications, he shows how AI-supported chatbots and analysis tools are already being used in crisis contexts.

The public perception of artificial intelligence (AI) shifted dramatically with the release of ChatGPT in November 2022. While much attention focused on chatbots’ ability to code websites or summarize entire books in seconds, large language models (LLMs) like GPT-4 hold far greater potential. In crisis situations, rapid information processing and effective communication are critical, and LLMs can play a pivotal role in both. However, AI technologies also carry risks, raising questions about how public institutions can deploy them responsibly.

Crises generate real-time data from countless sources, overwhelming human capacity for analysis. The Russian-Ukrainian War, for example, demanded swift action from the public sector. LLMs like GPT-4 excel at processing vast datasets efficiently, enabling governments to enhance citizen services and data-driven decision-making. Our research focuses on leveraging LLMs in the Russian-Ukrainian War context, specifically in refugee aid and propaganda detection.

Refugee Aid

Two tools illustrate how LLMs empower displaced populations and support policymakers:

- RefuGPT: A GPT-4-powered Telegram chatbot providing refugees with answers on healthcare, social benefits, and bureaucratic processes. By synthesizing official data and community-sourced information, RefuGPT delivers clear, actionable Q&A-formatted responses, simplifying complex procedures.

- R2G (“Refugee to Government”): This tool aggregates Telegram messages from Ukrainian refugee channels to identify real-time community needs (e.g., housing shortages, healthcare access). R2G uses GPT-4 to generate structured insights for policymakers, enabling NGOs and public institutions to prioritize urgent actions. Future plans include scaling R2G for international refugee organizations to align policies with refugees’ actual needs.
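To make the R2G idea more concrete, the following is a minimal sketch of how channel messages could be turned into structured counts of needs. It is not the project's actual implementation: the category list, the prompt wording, the JSON reply format, and the `keyword_stub` function (a stand-in for a real model call such as GPT-4) are all assumptions made for illustration.

```python
import json
from collections import Counter
from typing import Callable

# Hypothetical need categories; the article names housing and healthcare only as examples.
CATEGORIES = ["housing", "healthcare", "other"]


def build_prompt(messages: list[str]) -> str:
    """Ask the model to label each message with one category, as a JSON list."""
    numbered = "\n".join(f"{i}. {m}" for i, m in enumerate(messages, 1))
    return (
        "Label each message from a refugee channel with exactly one of "
        f"{CATEGORIES}. Reply with a JSON list of labels, one per message.\n\n"
        f"{numbered}"
    )


def summarize_needs(messages: list[str], llm: Callable[[str], str]) -> Counter:
    """Return how often each need category occurs in the messages."""
    if not messages:
        return Counter()
    labels = json.loads(llm(build_prompt(messages)))
    if len(labels) != len(messages):
        raise ValueError("model returned a different number of labels than messages")
    # Anything outside the known categories is folded into "other".
    return Counter(label if label in CATEGORIES else "other" for label in labels)


def keyword_stub(prompt: str) -> str:
    """Stand-in for a real model call, so the sketch runs offline."""
    labels = []
    # The numbered messages follow the first blank line of the prompt.
    for line in prompt.split("\n\n", 1)[1].splitlines():
        text = line.lower()
        if "apartment" in text or "room" in text:
            labels.append("housing")
        elif "doctor" in text or "clinic" in text:
            labels.append("healthcare")
        else:
            labels.append("other")
    return json.dumps(labels)


messages = [
    "Looking for a room for two adults",
    "Where can I find a doctor who speaks Ukrainian?",
    "Does anyone know the opening hours of the migration office?",
]
print(summarize_needs(messages, keyword_stub).most_common())
```

Passing the model in as a plain callable keeps the aggregation logic testable without network access; in a real deployment the stub would be replaced by a call to the chosen LLM, and the reply would need the same length check shown here.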

Propaganda Detection

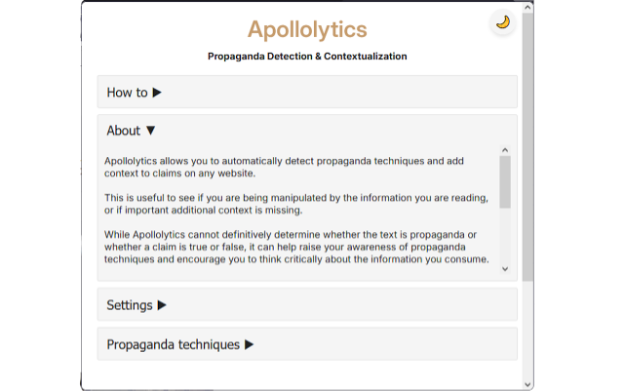

Apollolytics, a browser extension, helps users detect and understand manipulative content in online news. By highlighting text on any webpage, users trigger GPT-4 to analyze the excerpt, flag potential propaganda techniques, and provide brief explanations. Suspect passages are highlighted, alerting readers to subtle manipulation. Beyond detection, Apollolytics generates contextual insights about flagged content, allowing authorities to monitor online discourse and identify disinformation campaigns in real time—enabling swift, transparent responses.

Apollolytics browser extension
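The highlighting step can be illustrated with a small sketch. It assumes, purely for illustration, that the model replies with a JSON list of objects containing a `quote`, a `technique`, and an `explanation`; this reply format, the `Flag` class, and the `locate_flags` function are hypothetical and not taken from Apollolytics itself. The technique names in the example are likewise illustrative.

```python
import json
from dataclasses import dataclass


@dataclass
class Flag:
    start: int        # offset of the flagged passage in the excerpt
    end: int          # offset just past the flagged passage
    technique: str
    explanation: str


def locate_flags(excerpt: str, model_reply: str) -> list[Flag]:
    """Map quotes from an assumed JSON reply to character offsets in the excerpt."""
    flags = []
    for item in json.loads(model_reply):
        start = excerpt.find(item["quote"])
        if start == -1:
            # The model quoted text that is not in the excerpt; skip it rather than guess.
            continue
        end = start + len(item["quote"])
        flags.append(Flag(start, end, item["technique"], item["explanation"]))
    return sorted(flags, key=lambda f: f.start)


excerpt = "Only a traitor would question the plan. The plan was announced on Monday."
# Hand-written reply standing in for real model output.
reply = json.dumps([
    {"quote": "Only a traitor would question the plan.",
     "technique": "name calling",
     "explanation": "Attacks critics instead of their arguments."},
    {"quote": "This sentence is not in the excerpt.",
     "technique": "loaded language",
     "explanation": "Dropped because it cannot be located."},
])
for flag in locate_flags(excerpt, reply):
    print(excerpt[flag.start:flag.end], "->", flag.technique)
```

Only quotes that appear verbatim in the selected text are kept, so a passage the model misquotes is never highlighted. A real extension would then use the offsets to mark the corresponding ranges in the page.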

Insights for the Public Sector

Three key lessons emerge for deploying LLMs in crises:

- Contextualizing LLMs: Linking LLMs to external data sources improves accuracy and relevance, especially in dynamic scenarios like refugee crises. RefuGPT, for instance, draws on official data and community-sourced information to answer refugees' questions. Similarly, Apollolytics demonstrates how contextualizing LLM outputs (e.g., analyzing propaganda-marked text) enhances utility.

- Choosing LLM Types: Organizations must decide between closed-source LLMs (e.g., OpenAI’s GPT) and open-source alternatives (e.g., Meta’s Llama). Closed-source models offer vast knowledge bases and easy API integration, while open-source LLMs allow customization but require dedicated hosting infrastructure.

- Ethics in Crisis Deployment: Balancing LLM benefits with individual rights is critical. For example, while R2G complies with GDPR, its use raises ethical concerns, such as potential misuse by malicious actors to target refugees. Transparent public dialogue is essential to mitigate risks.
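The first lesson, linking an LLM to external data, can be sketched as a retrieval step followed by prompt assembly. The example below is a simplified illustration under stated assumptions: it ranks sources by plain word overlap, whereas production systems often use embedding-based search, and the function names, prompt wording, and placeholder documents are all hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents sharing the most words with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(d)), d) for d in documents]
    scored = [s for s in scored if s[0] > 0]       # drop sources with no overlap
    scored.sort(key=lambda s: s[0], reverse=True)  # stable sort keeps input order on ties
    return [d for _, d in scored[:k]]


def build_contextual_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Combine the retrieved sources and the question into one prompt."""
    context = retrieve(question, documents, k)
    sources = "\n".join(f"- {c}" for c in context) or "- (no matching source found)"
    return (
        "Answer the question using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


# Placeholder snippets standing in for official or community-sourced information.
docs = [
    "Health insurance: placeholder text describing how to register.",
    "Housing: placeholder text describing how to apply for accommodation.",
    "Language courses: placeholder text describing enrolment.",
]
print(build_contextual_prompt("How do I register for health insurance?", docs))
```

Restricting the model to the supplied sources is what keeps answers tied to current, verifiable information; the same structure works with either a closed-source API or a self-hosted open-source model.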

Tools like RefuGPT, R2G, and Apollolytics demonstrate how LLMs can strengthen the public sector’s crisis response through targeted refugee support and effective propaganda detection. To maximize impact, authorities should integrate LLMs with external data for precision, carefully select closed- or open-source models based on needs, and ensure ethical deployment that prioritizes transparency and data protection.

The article refers to the projects “Government as a Platform” (2nd Project Call) and “Propaganda Recognition with AI” (1st Founder Call). Kilian Sprenkamp is involved in both projects.