Project state

closed

Project start

May 2022

Funding duration

9 months

Universities involved

UZH, ZHAW

Practice partner

Universitätsspital Zürich

Funding amount DIZH

CHF 72'000

The development of a robust and efficient method for detecting objects in video from the intensive care unit makes it possible, for example, to determine the nursing intensity at individual beds. This could improve deployment planning for nursing staff and thus specifically help prevent overload.

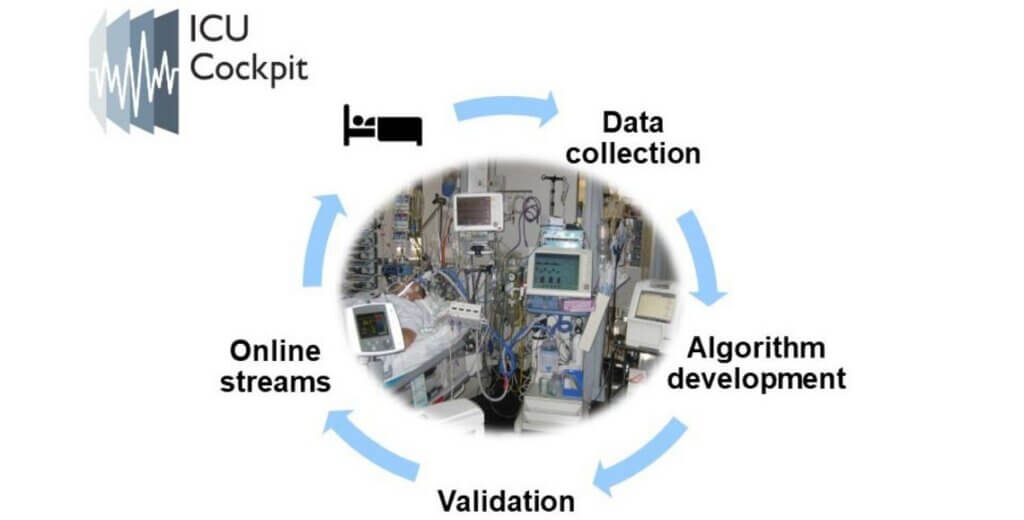

In the intensive care unit, patients are continuously monitored electronically so that an alarm can be raised immediately if, for example, a vital parameter leaves its target range. In the neurosurgical intensive care unit (NIPS) at the University Hospital Zurich (USZ), an average of 700 alarms sound per patient per day. The high number of alarms often places unnecessary demands on medical staff and ties up valuable time. To reduce the burden on staff in the future, an IT infrastructure has been set up at the USZ to record monitoring data and to develop digital systems for clinical support. However, it is becoming apparent that these systems are susceptible to artifacts in the measured signals, which can be caused by patient movement or medical interventions. Moreover, such artifacts are in themselves difficult to distinguish from physiological patterns, even for humans. By contrast, it is much easier to interpret a signal at the bedside, where the context in which it is measured is visible.

To make exactly this context available for the research and development of future support systems, and thus make them more resilient, the AUTODIDACT project developed an object recognition system that can extract contextual information from video data. Such video data is already available in the NIPS, as in many modern intensive care units, because patients there are monitored with cameras as standard.

Contextual information was defined at the beginning of the project as the recognition of the bed, the person being cared for, the nursing staff, and medical equipment. To protect the privacy of patients and medical staff, object recognition also had to work on blurred videos. This meant that entirely new object recognition software had to be developed, because pre-trained artificial intelligence (AI) systems for object recognition were only available for high-resolution images.

The anonymized video shows a detected patient, medical equipment and staff. With this information, the measured sensor values, such as pulse, can be understood in the context of what is happening to the patient.

For this purpose, blurred video data were first recorded as training data with the consent of patients and then annotated by humans. Based on these videos, a YOLOv5 model (an AI system for object recognition that is considered a quasi-industry standard) was trained in a first approach to establish a baseline. In this approach, object recognition is performed on individual images. Although recognition on blurred images was already relatively good, it was further improved in a second approach that takes into account the time course over several images. For this purpose, the three input channels of the YOLOv5 model (red, green, blue) were replaced by other image information: the red channel with the black-and-white image, the green channel with the pixel changes between the current and the previous image, and the blue channel with the positions of the previously detected objects. This “trick” resulted in a model that was much faster to train and provided more stable and accurate results overall, which also suited the limited hardware resources available.
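The channel replacement described above can be illustrated with a minimal sketch. It is not the project's implementation: the function names, the use of an absolute difference for the pixel changes, and the encoding of previous detections as filled rectangles are all assumptions made for illustration, since the text does not specify these details.

```python
import numpy as np


def to_gray(frame_rgb: np.ndarray) -> np.ndarray:
    """Convert an HxWx3 uint8 RGB frame to an HxW uint8 grayscale image."""
    weights = np.array([0.299, 0.587, 0.114])  # ITU-R BT.601 luma weights
    gray = frame_rgb.astype(np.float64) @ weights
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


def boxes_to_mask(boxes, shape) -> np.ndarray:
    """Draw previous detections as filled rectangles (assumed encoding)."""
    mask = np.zeros(shape, dtype=np.uint8)
    for x1, y1, x2, y2 in boxes:
        mask[y1:y2, x1:x2] = 255
    return mask


def build_temporal_input(curr_rgb, prev_rgb, prev_boxes) -> np.ndarray:
    """Build a 3-channel image: grayscale, frame difference, previous detections."""
    gray = to_gray(curr_rgb)
    prev_gray = to_gray(prev_rgb)
    # Absolute per-pixel change between current and previous frame (assumption).
    diff = np.abs(gray.astype(np.int16) - prev_gray.astype(np.int16)).astype(np.uint8)
    mask = boxes_to_mask(prev_boxes, gray.shape)
    # Channel order follows the text: "red" = grayscale, "green" = change, "blue" = detections.
    return np.stack([gray, diff, mask], axis=-1)
```

Because the result has the same shape and data type as an ordinary three-channel image, it could in principle be passed to a detector that expects RGB input without changing the network's input layer, which may be one reason such a substitution is convenient.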

The plan is to refine the developed models within the infrastructure at the University Hospital, or to train new models on a larger dataset, in order to further improve the accuracy of object detection.

Team

Dr. Jens Michael Boss, Universität Zürich, Neurosurgery and Intensive Care Medicine

Raphael Emberger, ZHAW School of Engineering, Centre for Artificial Intelligence

Lukas Tuggener, ZHAW School of Engineering, Centre for Artificial Intelligence

Prof. Dr. Thilo Stadelmann, ZHAW School of Engineering, Centre for Artificial Intelligence

Daniel Baumann, Universität Zürich, Neurosurgery and Intensive Care Medicine

Marko Seric, Universität Zürich, Neurosurgery and Intensive Care Medicine

PhD Shufan Huo, Universität Zürich, Neurosurgery and Intensive Care Medicine

Dr. Gagan Narula, Universität Zürich, Neurosurgery and Intensive Care Medicine

Practice partner

Prof. Dr. Emanuela Keller, Neurosurgery and Intensive Care Medicine, Universitätsspital Zürich

Further information

- AUTODIDACT in the research database of the ZHAW

- “Robust and efficient object detection method on video streams developed for critical care medicine”, post dated March 17, 2023 on ZHAW

Call type: 1st Rapid Action Call